29 Jul 2025

**Update 2nd September 2025**

We successfully completed the migration of our main server to a new Proxmox cluster last night at 20:00 UTC. We are happy to say that everything is up and running smoothly and we have noticed a significant improvement in performance.

The new IP address is 5.101.170.45. If you whitelist our IP addresses, you can now safely delete the old IP address from your whitelist.

The migration was successful but not entirely straightforward. There were a couple of false starts when the snapshots used for the transfer were corrupted and we ended up having to pause the old server for around an hour in order to create a stable snapshot.

We would like to give a big thank you to all our Pro users for helping us keep the lights on!

Upgrade To Proxmox

We're upgrading Downtime Monkey's main server to a Proxmox-based cloud cluster on 1st September, 2025 at 3:00 AM UTC.

There are several benefits to using Proxmox, including speed and efficiency, but the main advantage is the ease of redundancy - if one server has an issue, another can instantly take over.

New IP Address: 5.101.170.45

For most users no action is needed - monitoring will continue as before the migration.

However, if you actively whitelist our IP address, please add the new one to your whitelist in advance of September 1st.

After the migration, you can safely remove the old IP address (217.146.95.83) from your whitelist.
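If your whitelist lives in application code rather than in a firewall, the changeover could look something like the sketch below. It is only an illustration: the names `ALLOWED_MONITOR_IPS` and `is_monitoring_request` are made up for this example, and the two addresses are the ones given in this post.

```python
import ipaddress

# Both addresses are taken from this post. Keeping the old one listed until
# the migration is finished means checks are accepted from either server.
ALLOWED_MONITOR_IPS = {
    ipaddress.ip_address("5.101.170.45"),   # new address
    ipaddress.ip_address("217.146.95.83"),  # old address, removable after the migration
}


def is_monitoring_request(remote_addr):
    """Return True if remote_addr is one of the listed monitoring addresses."""
    try:
        return ipaddress.ip_address(remote_addr) in ALLOWED_MONITOR_IPS
    except ValueError:
        # Not a valid IP address string at all
        return False


print(is_monitoring_request("5.101.170.45"))
print(is_monitoring_request("203.0.113.9"))
```

Once the migration is complete, deleting the old entry from the set is the only change needed.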

This upgrade is all about making Downtime Monkey more resilient, and we couldn't do it without the support of our Pro users. Thanks for being part of our journey!

28 Jul 2024

**Update 5th September 2024**

A further server migration was completed today. Although the previous server worked fine, there were some performance aspects that we were not happy with, so we decided that another change was best.

The transition took place today at around 10.45 UTC with approximately 10 minutes of downtime. There was also a short period when emails were not sent. We are now running on the new server and the IP address remains the same as before.

**Update 1st August 2024**

The upgrade to the new main server completed successfully, beginning on Monday morning a little later than expected at around 10am UTC.

The transition was smooth with just a few minutes of downtime and we are now running on the new server. Note the IP address of the new server remains the same as before.

A big thank you to everyone who has signed-up recently and especially to our Pro users who keep the lights on - we really appreciate it!

An upgrade of Downtime Monkey's main server is scheduled to take place on Monday 29th July at approximately 9am UTC. The old server has served us well, but has now become a little long in the tooth, so we will be migrating to a new server.

There will be a short period (of approximately 15 minutes) when the website may be offline while the migration takes place. We hate downtime but in this situation it's unavoidable.

The good news is that all Downtime Monkey customers will enjoy the benefits of the updated server in the years to come.

Thanks again and looking forward to updating this post from the shiny new server!

03 Jun 2024

**Update 6th June 2024** Issue resolved with viewing stats

Yesterday we fixed the issue where some users couldn't view their monitoring stats. Stats can now be seen by all users.

For some monitors, response times between late on 4th June and early on 6th June are missing from the stats but other than this all stats can be viewed as normal.

**Update 4th June 2024** Database upgrade maintenance was completed today.

The upgrade to the database on our main server took place today as scheduled and was completed with less than 15 minutes of downtime.

We are aware of one post-update issue where some users are unable to view the stats of their monitors - note that all monitoring and alerting is functioning correctly. We are working on a fix and will keep you informed of progress here.

The Downtime Monkey main server is scheduled to upgrade its database software to the latest version on 4th June 2024 at 9am UTC.

The update is necessary for keeping the Downtime Monkey database secure, reliable and fast over the coming years. We will update this post when the upgrade has been concluded.

13 Feb 2022

**UPDATE**

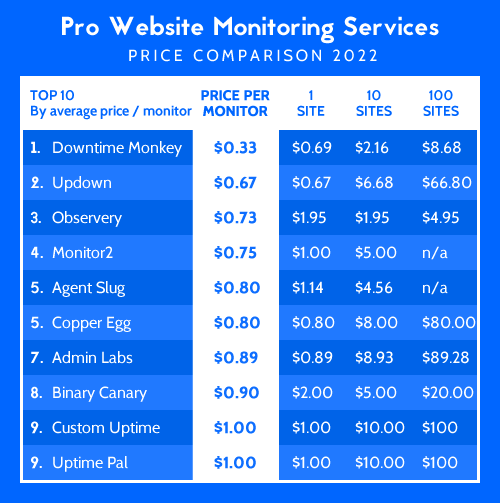

The comparison for 2022 has been completed. You can skip straight to the results for 2022, view the results for 2021, 2020 & 2019, or read on to see how we ranked the sites...

We compared every available website monitoring service by price. A comparison of each service was made by:

1) the price of the cheapest Pro plan

2) the price to monitor 10 websites

3) the price to monitor 100 websites

We then calculated the average price per monitor which we used for the rankings.

Ranking by Average Price Per Monitor

In the past (2021 and before) sites were ranked according to their cheapest available Pro plan. This worked fine but we noticed that it favoured services that have a fixed price per monitor. These are usually cheap if you want to monitor a single site but quickly become expensive as the number of monitors increases.

This year we have calculated the average price per monitor by dividing each of the three prices (for 1, 10 and 100 monitors) by its number of monitors and then taking the average of the results. This gives a more balanced ranking which factors in both bigger and smaller plans.
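As a rough illustration, the calculation can be sketched in a few lines of Python. The function name `average_price_per_monitor` is invented for this example, the prices are taken from the 2022 results, and skipping plans marked "n/a" is an assumption (it is consistent with the published figure for Monitor2).

```python
from statistics import mean


def average_price_per_monitor(prices):
    """Average the per-monitor price across the plans that exist.

    prices maps a number of monitors to the monthly price in US dollars,
    or to None where no plan of that size is offered (assumed to be skipped).
    """
    per_monitor = [price / count for count, price in prices.items() if price is not None]
    return mean(per_monitor)


# Prices from the 2022 results
downtime_monkey = {1: 0.69, 10: 2.16, 100: 8.68}
monitor2 = {1: 1.00, 10: 5.00, 100: None}  # no 100-monitor plan listed

print(round(average_price_per_monitor(downtime_monkey), 2))  # 0.33
print(round(average_price_per_monitor(monitor2), 2))         # 0.75
```

For Downtime Monkey this works out as ($0.69 + $0.216 + $0.0868) / 3, which rounds to $0.33.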

We haven't included Free plans in the comparison, however here is a feature comparison of Free vs Pro plans which shows the kind of features to expect from a decent Pro plan.

US dollars were used for the comparison. For sites that only accept other currencies, prices were converted by the exchange rate on Google Finance on 08 Feb 2022.

Where a cheaper price was available by paying yearly, the annual cost divided by 12 was used for comparison.

Price, Features & Quality

This is a price comparison article.

However... it is worth remembering that price is not the only factor to take into account when choosing a website monitoring service (or any service).

Features and quality are also important. We haven't reviewed these here because feature reviews can be very subjective. Since we provide one of the website monitoring services in the comparison it is vital that the results are unbiased. Therefore we chose a measure that was hard to argue with - price.

That being said, we did notice that the price of a service seems to be totally uncorrelated with the quality and features provided. Some of the cheapest services provide higher quality than other more expensive competitors. On the flip side, some of the cheap options are very poor indeed!

But we'll let you decide on quality yourselves... here are our website monitoring plans including the full list of features.

Below are the top 10 sites or skip straight to the full list...

Which Monitoring Sites Were Included?

Initially we viewed every website monitoring service available online - there were 202 at the time of writing!

We excluded very expensive services, sites that clearly had problems and any monitoring software that needed installation on your server. This reduced the number of sites considerably - 41 monitoring services made it through to the final results.

Cheap Monitoring Only

Sites with a cheapest plan costing $10/month or more were excluded since they can't be considered low-price.

Free plans were not included because we wanted to compare applications with Pro features.

Also excluded were "Pro" plans that don't provide anything more than the average Free plan. For example, "Pro" plans with monitoring intervals of greater than 1 minute, with alerts only available via email or with monitoring from only one location were not included.

Sites which don't provide prices on their website or which require the customer to contact them to receive a price weren't included either.

Working Sites Only

We excluded a few sites that clearly have problems: if the site didn't load, the SSL certificate was invalid or Google warned us that it contained malware then we didn't include it.

Also, we didn't include sites that are in Beta testing.

External Monitoring Services Only

This comparison is for external monitoring services, so we didn't include software that requires installation on the customer's server.

2022 Results

Again, some interesting trends in the website monitoring space were illuminated by this year's results...

Even More Monitoring Services: the total number of website monitoring services has continued to increase despite several services shutting up shop: 158 in 2019, 170 in 2020, 185 in 2021 and 202 in 2022.

But Fewer Quality Services: the total number of services that made the final results decreased from 55 in 2019 to 41 in 2020, recovered slightly to 46 in 2021 and returned to the low point of 41 in 2022. The main reason for this trend is an increased number of new services that offer paid plans with only free plan features.

Prices Largely Unchanged: following large price increases in 2020 and moderate increases in 2021 it was interesting to see that prices remain largely unchanged across the industry this year.

| Monitoring Service | Average Cost/Monitor | 1 Monitor | 10 Monitors | 100 Monitors | Currencies |
| --- | --- | --- | --- | --- | --- |
| 1) Downtime Monkey | $0.33 | $0.69 | $2.16 | $8.68 | $€+120 |
| 2) Updown | $0.67 | $0.67 | $6.68 | $66.80 | €₿ |
| 3) Observery | $0.73 | $1.95 | $1.95 | $4.95 | $ |
| 4) Monitor2 | $0.75 | $1.00 | $5.00 | n/a | $ |
| 5) Agent Slug | $0.80 | $1.14 | $4.56 | n/a | $ |
| 6) Copper Egg | $0.80 | $0.80 | $8.00 | $80.00 | $ |
| 7) Admin Labs | $0.89 | $0.89 | $8.93 | $89.28 | $ |
| 8) Binary Canary | $0.90 | $2.00 | $5.00 | $20.00 | $ |
| 9) Custom Uptime | $1.00 | $1.00 | $10.00 | $100.00 | $ |
| 10) Uptime Pal | $1.00 | $1.00 | $10.00 | $100.00 | $ |
| 11) MonTools | $1.07 | $1.07 | $10.71 | n/a | $ |
| 12) Cloudsome | $1.58 | $4.17 | $4.17 | $16.67 | $ |
| 13) Cloud Radar | $1.70 | $1.70 | $17.00 | $170.00 | $€ |
| 14) Pingerman | $1.80 | $4.58 | $4.58 | $37.50 | $ |
| 15) API Checker | $1.84 | $4.58 | $4.58 | $49.00 | $ |
| 16) AppBeat | $2.11 | $5.70 | $5.70 | $5.70 | € |
| 17) Cloud Probes | $2.16 | $3.60 | $7.20 | n/a | $ |
| 18) Ping Monit | $2.29 | $4.16 | $4.16 | n/a | $ |
| 19) Pulse Ping | $2.30 | $5.99 | $5.99 | $29.99 | $ |
| 20) Uptime Mate | $2.49 | $5.70 | $10.26 | $74.10 | € |
| 21) Up Status | $2.63 | $5.00 | $19.00 | $99.00 | $ |
| 22) Uptime Robot | $2.64 | $7.00 | $7.00 | $21.00 | $ |
| 23) Uptime Checker | $2.67 | $6.00 | $15.00 | $50.00 | $ |
| 24) Hitflow | $2.69 | $6.82 | $6.82 | $56.99 | € |
| 25) Dev Monitor | $2.75 | $5.00 | $5.00 | n/a | $ |
| 26) Pingrely | $2.76 | $7.08 | $7.08 | $47.92 | $ |
| 27) Uptime 360 | $3.03 | $5.00 | $29.00 | $119.00 | $ |
| 28) Pingniner | $3.32 | $5.80 | $8.30 | n/a | $ |
| 29) Observu | $3.38 | $6.95 | $14.99 | $169.00 | $ |
| 30) Uptimia | $3.56 | $9.00 | $9.00 | $79.00 | $ |
| 31) Monitive | $3.59 | $7.00 | $30.60 | $72.00 | $ |
| 32) Site 24x7 | $3.60 | $9.00 | $9.00 | $89.00 | $€ |
| 33) Super Monitoring | $3.70 | $4.91 | $24.91 | contact | $€ |
| 34) Cronitor | $3.85 | $7.00 | $25.00 | $205.00 | $ |
| 35) YupTimer | $3.85 | $7.00 | $7.00 | n/a | $ |
| 36) Pinghut | $3.99 | $9.00 | $27.00 | $27.00 | $ |
| 37) Net Ping | $4.04 | $8.00 | $35.00 | $62.00 | $ |
| 38) WebPing | $4.11 | $8.06 | $19.91 | $226.56 | PLN |
| 39) Uptime Doctor | $4.37 | $7.95 | $7.95 | n/a | $ |
| 40) Are My Sites Up | $4.37 | $8.00 | $32.00 | $192.00 | $ |
| 41) Alertra | $5.47 | $9.95 | $9.95 | n/a | $ |

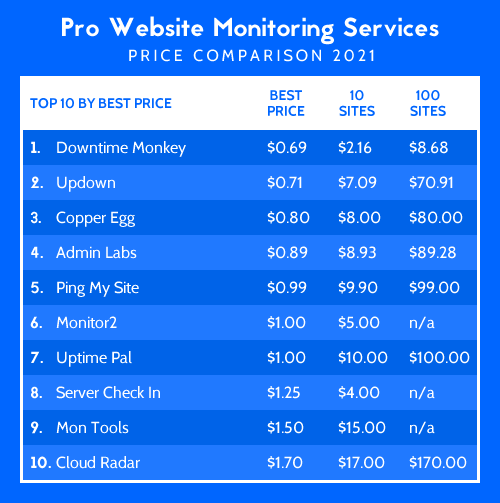

2021 Results

For sites that only accept currencies other than US dollars, prices were converted by the exchange rate on Google Finance on 23rd April 2021.

| Monitoring Service | Cheapest Plan | 10 Monitors | 100 Monitors | Currencies |
| --- | --- | --- | --- | --- |
| 1) Downtime Monkey | $0.69 | $2.16 | $8.68 | $, €, £ +120 |
| 2) Updown | $0.71 | $7.09 | $70.91 | € |
| 3) Copper Egg | $0.80 | $8.00 | $80.00 | $ |
| 4) Admin Labs | $0.89 | $8.93 | $89.28 | $ |
| 5) Ping My Site | $0.99 | $9.90 | $99.00 | $ |
| 6) Monitor2 | $1.00 | $5.00 | n/a | $ |
| 7) Uptime Pal | $1.00 | $10.00 | $100.00 | $ |
| 8) Server Check In | $1.25 | $4.00 | n/a | $ |
| 9) Mon Tools | $1.50 | $15.00 | n/a | $ |
| 10) Cloud Radar | $1.70 | $17.00 | $170.00 | $, € |
| 11) Observery | $1.95 | $1.95 | $4.95 | $ |
| 12) Vigil | $1.99 | $19.99 | $199.00 | $ |
| 13) Binary Canary | $2.00 | $5.00 | $20.00 | $ |
| 14) Agent Slug | $2.02 | $2.02 | $5.04 | € |
| 15) Pingr | $2.04 | $11.36 | $104.68 | $ |
| 16) Monitoring Me | $2.36 | $12.89 | n/a | £ |
| 17) Statusoid | $3.49 | $34.90 | $349.00 | $ |
| 18) Cloud Probes | $3.60 | $7.20 | n/a | $ |
| 19) Ping Ping | $4.00 | $8.00 | $60.00 | $ |
| 20) Ping Monit | $4.16 | $4.16 | n/a | $ |
| 21) Super Monitoring | $4.91 | $24.91 | contact | € |
| 22) API Checker | $4.99 | $4.99 | $44.91 | $ |
| 23) Dev Monitor | $5.00 | $5.00 | n/a | $ |
| 24) Monitive | $5.00 | $9.50 | $60.00 | $ |
| 25) Ops Dash | $5.00 | $10.00 | $100.00 | $ |
| 26) Up Status | $5.00 | $10.00 | $100.00 | $ |
| 27) Uptime 360 | $5.00 | $15.00 | n/a | $ |
| 28) Pulse Ping | $5.99 | $5.99 | $29.99 | $ |
| 29) Uptime Checker | $6.00 | $15.00 | $50.00 | $ |
| 30) App Beat | $6.04 | $6.04 | $60.49 | € |
| 31) Uptime Mate | $6.05 | $10.89 | $78.65 | € |
| 32) Server Guard 24 | $6.90 | $23.90 | $129.90 | $ |
| 33) Pingniner | $6.95 | $9.95 | n/a | $ |
| 34) Observu | $6.95 | $14.99 | $169.00 | $ |
| 35) Site 24x7 | $7.00 | $7.00 | $71.00 | $ |
| 36) Uptime Robot | $7.00 | $7.00 | $21.00 | $ |
| 37) Yup Timer | $7.00 | $7.00 | n/a | $ |
| 38) Pingrely | $7.08 | $7.08 | $47.92 | $ |
| 39) Hitflow | $7.24 | $7.24 | $60.48 | € |
| 40) Uptime Doctor | $7.95 | $7.95 | n/a | $ |
| 41) Are My Sites Up | $8.00 | $32.00 | $192.00 | $ |
| 42) NodePing | $8.00 | $15.00 | $15.00 | $ |
| 43) Pinometer | $8.00 | $8.00 | $64.00 | $ |
| 44) Uptimia | $9.00 | $9.00 | $79.00 | $ |
| 45) Status Rocket | $9.00 | $29.00 | $149.00 | $ |
| 46) Alertra | $9.95 | $9.95 | n/a | $ |

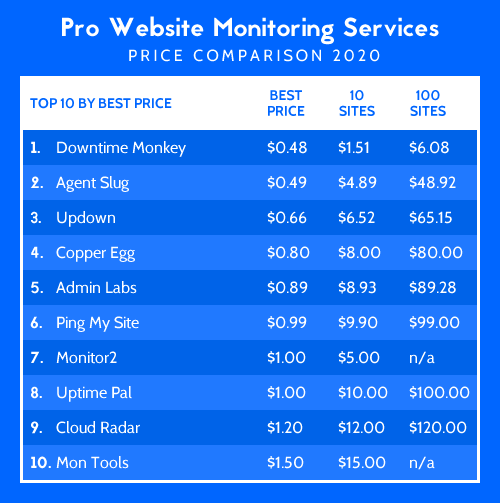

2020 Results

For sites that only accept currencies other than US dollars, prices were converted by the exchange rate on Google Finance on 3rd March 2020.

| Monitoring Service | Cheapest Plan | 10 Monitors | 100 Monitors | Currencies |
| --- | --- | --- | --- | --- |
| 1) Downtime Monkey | $0.48 | $1.51 | $6.08 | $, €, £ +120 |
| 2) Agent Slug | $0.49 | $4.89 | $48.92 | € |
| 3) Updown | $0.66 | $6.52 | $65.15 | €, Ƀ |
| 4) Copper Egg | $0.80 | $8.00 | $80.00 | $ |
| 5) Admin Labs | $0.89 | $8.93 | $89.28 | $ |
| 6) Ping My Site | $0.99 | $9.90 | $99.00 | $ |
| 7) Monitor2 | $1.00 | $5.00 | n/a | $ |
| 8) Uptime Pal | $1.00 | $10.00 | $100.00 | $ |
| 9) Cloud Radar | $1.20 | $12.00 | $120.00 | $, € |
| 10) Mon Tools | $1.50 | $15.00 | n/a | $ |
| 11) Observery | $1.95 | $1.95 | $4.95 | $ |
| 12) Vigil | $1.99 | $19.99 | $199.00 | $ |
| 13) Binary Canary | $2.00 | $5.00 | $20.00 | $ |
| 14) Monitoring Me | $2.36 | $5.40 | $11.85 | £ |
| 15) Statusoid | $3.49 | $34.90 | $349.00 | $ |
| 16) Cloud Probes | $3.60 | $7.20 | n/a | $ |
| 17) Ping Monit | $4.16 | $4.16 | n/a | $ |
| 18) Uptime Robot | $4.50 | $4.50 | $7.40 | $ |
| 19) Super Monitoring | $4.91 | $24.91 | contact | € |
| 20) Pingoscope | $4.95 | $9.95 | $9.95 | $ |
| 21) Up Ninja | $4.99 | $4.99 | $24.99 | $ |
| 22) API Checker | $4.99 | $4.99 | $49.99 | $ |
| 23) Online Or Not | $5.00 | $5.00 | $99.00 | $ |
| 24) Ops Dash | $5.00 | $10.00 | $100.00 | $ |
| 25) Oh Dear | $5.56 | $22.24 | $111.18 | € |
| 26) Uptime Checker | $6.00 | $15.00 | $50.00 | $ |
| 27) Hitflow | $6.66 | $6.66 | $55.58 | € |
| 28) Server Guard 24 | $6.90 | $23.90 | $129.90 | $ |
| 29) Pingniner | $6.95 | $9.95 | n/a | $ |
| 30) Observu | $6.95 | $14.99 | $169.00 | $ |
| 31) Do You Check | $7.00 | $20.00 | n/a | $, €, £ |
| 32) Yup Timer | $7.00 | $7.00 | n/a | $ |
| 33) Site 24x7 | $7.00 | $7.00 | $71.00 | $ |
| 34) Pingrely | $7.08 | $7.08 | $47.92 | $ |
| 35) Uptime Doctor | $7.95 | $7.95 | n/a | $ |
| 36) Anturis | $8.00 | $8.00 | $76.00 | $ |
| 37) Are My Sites Up | $8.00 | $32.00 | $192.00 | $ |
| 38) NodePing | $8.00 | $15.00 | $15.00 | $ |
| 39) Pinometer | $8.00 | $8.00 | $64.00 | $ |
| 40) Uptimia | $9.00 | $9.00 | $79.00 | $ |
| 41) Alertra | $9.95 | $9.95 | $20.00 | $ |

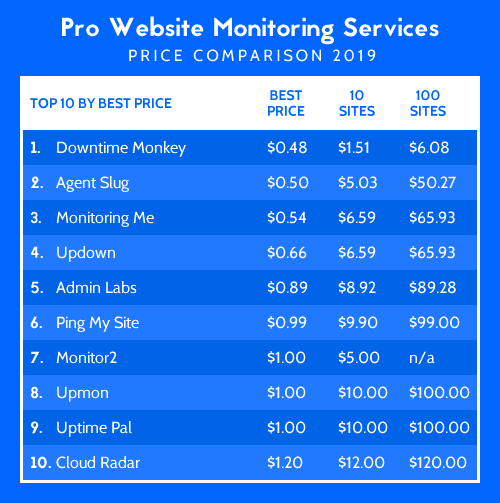

2019 Results

US dollars were used for the comparison. For sites that only accept other currencies, prices were converted by the exchange rate on Google Finance on 5th June 2019.

| Monitoring Service | Cheapest Plan | 10 Monitors | 100 Monitors | Currencies |
| --- | --- | --- | --- | --- |
| 1) Downtime Monkey | $0.48 | $1.51 | $6.08 | $, €, £ +120 |
| 2) Agent Slug | $0.50 | $5.03 | $50.27 | € |
| 3) Monitoring Me | $0.54 | $5.40 | $54.00 | £ |
| 4) Updown | $0.66 | $6.59 | $65.93 | €, Ƀ |
| 5) Admin Labs | $0.89 | $8.92 | $89.28 | $ |
| 6) Ping My Site | $0.99 | $9.90 | $99.00 | $ |
| 7) Monitor2 | $1.00 | $5.00 | n/a | $ |
| 8) Upmon | $1.00 | $10.00 | $100.00 | $ |
| 9) Uptime Pal | $1.00 | $10.00 | $100.00 | $ |
| 10) Cloud Radar | $1.20 | $12.00 | $120.00 | $, € |
| 11) Down Notifier | $1.25 | $1.25 | $28.05 | $ |
| 12) MonTools | $1.25 | $10.71 | $107.00 | $ |
| 13) Server Check | $1.25 | $4.00 | n/a | $ |
| 14) Vigil | $1.99 | $19.90 | $199.00 | $ |
| 15) Statusoid | $2.75 | $14.90 | $136.40 | $ |
| 16) Noty | $3.00 | $10.00 | $10.00 | $ |
| 17) Do You Check | $3.00 | $20.00 | n/a | $, €, £ |
| 18) Upski | $3.99 | $3.99 | n/a | $ |
| 19) Ping Monit | $4.16 | $4.16 | n/a | $ |
| 20) Uptime Robot | $4.50 | $4.50 | $7.40 | $ |
| 21) Port Monitor | $4.54 | $4.54 | $33.29 | $ |
| 22) API Checker | $4.58 | $4.58 | $44.92 | $ |
| 23) Super Monitoring | $4.59 | $24.28 | contact | € |
| 24) Pingoscope | $4.95 | $9.95 | $9.95 | $ |
| 25) Got Site Monitor | $4.95 | $9.95 | n/a | $ |
| 26) Binary Canary | $5.00 | $5.00 | $20.00 | $ |
| 27) R-U-ON | $5.00 | $9.99 | $25.00 | $ |
| 28) OpsDash | $5.00 | $10.00 | $100.00 | $ |
| 29) WebGazer | $5.00 | $15.00 | contact | $ |
| 30) Oh Dear | $5.17 | $20.68 | $103.36 | € |
| 31) AppBeat | $5.62 | $5.62 | $56.29 | € |
| 32) Uptime Checker | $6.00 | $15.00 | $50.00 | $ |
| 33) Turbo Monitoring | $6.69 | $66.38 | n/a | € |
| 34) Server Guard 24 | $6.90 | $23.90 | $129.90 | $ |
| 35) Observu | $6.95 | $14.99 | $169.00 | $ |
| 36) WatchSumo | $7.00 | $21.00 | contact | $ |
| 37) Pingrely | $7.08 | $7.08 | $47.92 | $ |
| 38) Pingdom | $7.95 | $7.95 | $131.00 | $ + contact |
| 39) Uptime Doctor | $7.95 | $7.95 | n/a | $ |
| 40) Uptime | $8.00 | $8.00 | $64.00 | $ |
| 41) Pinometer | $8.00 | $8.00 | $64.00 | $ |
| 42) Anturis | $8.00 | $8.00 | $76.00 | $ |
| 43) NodePing | $8.00 | $15.00 | $15.00 | $ |
| 44) Are My Sites Up | $8.00 | $32.00 | $192.00 | $ |
| 45) WebMon | $8.33 | $33.33 | $79.17 | $ |
| 46) Ping Stack | $9.00 | $9.00 | $49.00 | $ |
| 47) Uptimia | $9.00 | $9.00 | $79.00 | $ |
| 48) Site 24x7 | $9.00 | $9.00 | $89.00 | $ |
| 49) Macro Wave | $9.40 | $9.40 | n/a | € |
| 50) Host Tracker | $9.92 | $9.92 | $74.92 | $ |
| 51) Alertra | $9.95 | $9.95 | $20.00 | $ |
| 52) Monitor Fox | $9.95 | $9.95 | $59.95 | $ |
| 53) Happy Apps | $9.95 | $9.95 | $199.95 | $ |
| 54) Let’s Monitor | $9.99 | $9.99 | $9.99 | $ |
| 55) Candum | $9.99 | $9.99 | $89.99 | $ |

15 Jun 2021

**Update 17th June 2021** Scheduled maintenance was completed successfully today.

The work to upgrade the local network of our main server went well and was completed faster than expected, in under 10 minutes. However, one issue occurred during the maintenance: some false positive downtime alerts were sent out to customers during this time (6am to 6.08am UTC) - these can safely be ignored.

Our main server's local network is scheduled for maintenance on 17th June 2021 at 6am UTC.

Our Upstream Provider will replace a top of rack switch with newer generation hardware capable of 40G networking. This is expected to take 15-20 minutes.

Although our server will remain running during this time the network will be down. Therefore our services will be paused for the duration of the maintenance and will resume immediately after maintenance is completed.

We hate downtime as much as anyone and we've had 100% uptime for over a year now, so we really dislike seeing our perfect record broken.

However, we realise that this maintenance is essential and look forward to the performance benefits it will bring. Thanks for your patience with this.

10 Jun 2021

We've updated the phone number that sends text alerts to US customers to a new 10DLC number. You don't need to do anything, but if you're in the US you'll notice your SMS alerts come from a new number.

What Is 10DLC?

10DLC stands for '10 digit long code'. In layman's terms it's a phone number that is ten figures long.

These numbers are the new standard for 'application to person' text messages, where a message is automatically sent to a person by a computer.

Why The Change?

The 10DLC standard has been created to combat text message spam while allowing legitimate automated text messages to be received. It's not a regulation as such, in that it's not enforced by government. It's a standard set by US phone carriers, such as AT&T, T-Mobile etc.

Either way, it was essential for Downtime Monkey to get onboard to make sure our US text alerts continue to be received round the clock. So we've changed our US number to a 10 digit US toll free number - it's already in place and working well.

A big thanks to all our Pro Plan customers for keeping Downtime Monkey running, you're awesome!

02 Feb 2021

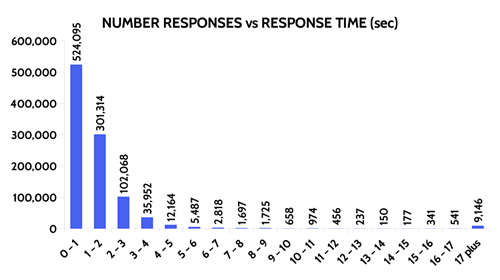

Downtime Monkey now logs more than 4 million website responses each day. We analysed these to see how fast websites respond in practice.

View the full results below or skip to the sound bites.

1 Million Response Times Analysed

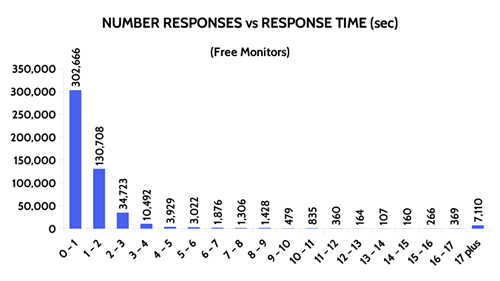

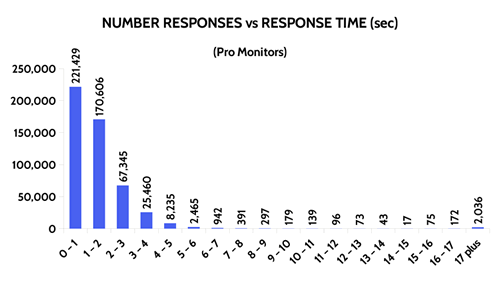

We collected 1 million response times from real websites: half a million from Pro plans and half a million from Free plans.

We then split these into groups: 0 to 1 second, 1 to 2 seconds, 2 to 3 seconds etc. and then counted the number in each group. For responses slower than 17 seconds a site is considered down.
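For anyone who wants to try the same grouping on their own data, here is a minimal sketch of the idea. It is not the code we used for the study: the function name `bucket_response_times` and the sample values are made up for illustration, and treating a response of exactly 17 seconds as "not down" is an assumption.

```python
from collections import Counter

DOWN_THRESHOLD_SECONDS = 17  # from the post: slower than this counts as down


def bucket_response_times(times_in_seconds):
    """Count responses in one-second groups, with a separate "down" group."""
    counts = Counter()
    for t in times_in_seconds:
        if t > DOWN_THRESHOLD_SECONDS:
            counts["down"] += 1
        else:
            # int() truncates, so 0 means "0 to 1 second", 1 means "1 to 2 seconds", ...
            counts[int(t)] += 1
    return counts


sample = [0.18, 0.42, 0.97, 1.28, 2.6, 18.4]  # made-up values for illustration
counts = bucket_response_times(sample)
for group in sorted(key for key in counts if key != "down"):
    print(f"{group} to {group + 1} seconds: {counts[group]}")
print(f"down: {counts['down']}")
```

The same approach works for the quarter-second groups by multiplying each time by 4 before truncating.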

Here are the results:

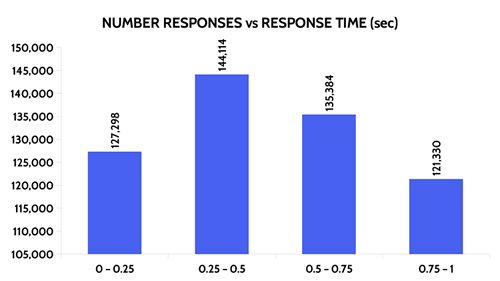

Fastest Websites Analysed

The majority of sites responded within a second, so we took another sample and broke the fastest sites into groups of a quarter of a second.

Free vs Pro

The Sound Bites

The fastest 10% of websites respond in under 0.2 seconds.

The average response time is 1.28 seconds.

Over 90% of sites respond within 3 seconds.

Nearly 1% of websites are down at any single moment.

If you would like to see how fast your website responds sign-up here or check out our features.

22 Oct 2020

Downtime Monkey has now been monitoring websites for just over 3 years. Since 2017, we have offered a 30% 'early-bird' discount on Pro subscriptions. But this is coming to an end very soon...

30% Discount Locked-in For 3 Years

Don't fret though, you can still access the discount now... but the offer will end in November.

Note that the discount is locked-in for 36 months from the date of purchase so if you buy a Pro Plan today you'll still receive the discount in October 2023.

Existing Pro Customers

If you're an existing Pro customer don't worry, you won't lose your discount because it's locked in for 36 months from your original purchase.

However, if you're intending to upgrade it may be worth doing this now to keep your 'early-bird' discount.

A big thank you to all the Pro users who have kept the service going since September 2017!

17 Aug 2020

The stats overview page that we launched in June was very well received. We have followed up on this and developed a similar idea for downtime logs. This went live on Friday and shows downtime records for all your monitors in one place.

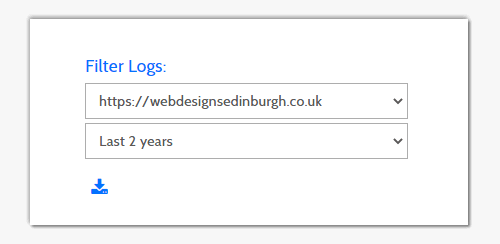

Filter Logs

By default, logs of all downtimes in the last 24 hours are shown. This means that you'll immediately see downtimes from any recent outages and get the important details at a glance.

It's possible to show logs for any site individually. This is really handy if you monitor a lot of websites and want to focus in on one particular site.

You can also change the timespan to see logs up to 2 years old: options are 24 hours, 7 days, 30 days, 90 days, 1 year and 2 years. You can view logs for all your monitors together for up to 30 days - for more than this select an individual site.

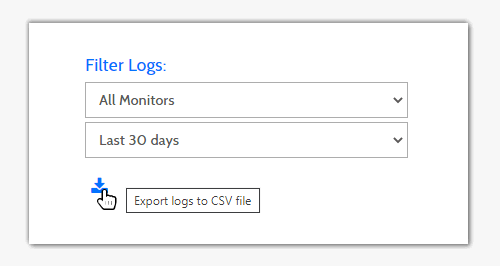

Download Logs

As well as viewing logs you can export logs to a spreadsheet (CSV file) in just one click. This is really useful if you want to send logs to a customer, provide evidence of downtimes to a hosting provider or include logs in a company report.

View Timestamps in your Timezone

In every log, timestamps of the start and end of the downtime are shown. These are displayed in your preferred timezone and exactly match the timestamp shown in the corresponding downtime alerts.

This makes it incredibly easy to cross-reference a log with a particular alert as shown in this recent post on timestamps on downtime alerts.

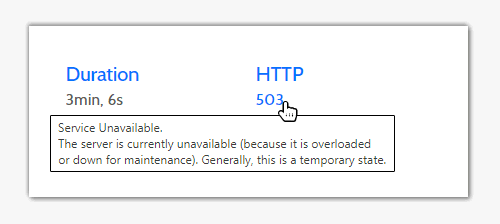

See the Reason For Every Downtime

The HTTP code is shown in each log and you can hover on this to see the cause of the downtime in plain English. This can help you troubleshoot problems and get your website back online quickly.
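If you export your logs and want a similar plain-English lookup in your own scripts, Python's standard library already includes the official reason phrases. The sketch below is only an illustration and is not how the log page is built; `describe_status` is a name invented for this example.

```python
from http import HTTPStatus


def describe_status(code):
    """Return the code followed by its standard reason phrase."""
    try:
        status = HTTPStatus(code)
    except ValueError:
        # Codes outside the standard registry have no phrase to look up
        return f"{code}: unrecognised status code"
    return f"{code}: {status.phrase}"


for code in (404, 500, 503):
    print(describe_status(code))
```

A 503, for example, comes back as "503: Service Unavailable", which usually points to an overloaded server or one that is down for maintenance.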

A big thank you to everyone using Downtime Monkey and especially to our Pro users - it wouldn't be possible without you!
